To identify the best settings for our particular use case, it is a good idea to experiment with alternative loss functions and hyperparameters.

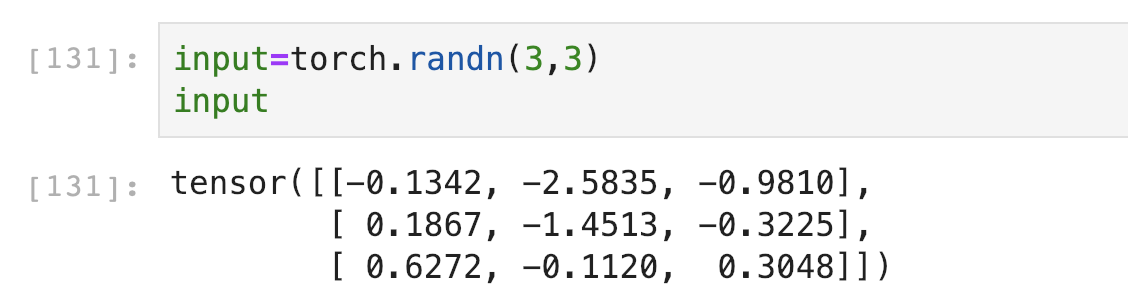

To summarize, cross-entropy loss is a popular loss function in deep learning and is very effective for classification tasks. It is a strong and useful tool for training deep learning models. Still, it is only one of many possible loss functions and might not be the ideal option for every task or dataset.

For most PyTorch neural networks, you can use a built-in loss function such as `nn.CrossEntropyLoss`. This criterion computes the cross-entropy loss between the input logits and the target. Note that PyTorch's version does not exactly match the conventional definition of cross-entropy. It takes unnormalized scores (logits) and integer class labels rather than two probability distributions, and it applies the softmax itself. A manual check of `TF.cross_entropy()` proceeds as follows:

1. Import `torch`, and import `torch.nn.functional` with the alias `TF`.
2. Define some sample input data with 4 samples and 10 classes.
3. Create a tensor called `labels` that holds the target class index of each sample. It is a `LongTensor`, so it contains 64-bit integers.
4. Call `TF.cross_entropy()`, which takes two arguments:
   - `input_data`, the predicted output of the model (the output of the final layer before any softmax activation is applied);
   - `labels`, the true label for each input sample.
5. Compute the softmax probabilities manually, passing `input_data` and `dim=1`. This applies the softmax along the second (class) dimension of the `input_data` tensor. Print the resulting probabilities.
6. Compute the cross-entropy loss manually. Take the log of the softmax probability at each sample's target class index, average over all samples, and negate the result.
7. Finally, print the manually computed loss.

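The manual steps above can be sketched in NumPy. This is a re-implementation under assumptions, not the original PyTorch listing:
- the logits are random;
- the `labels` values are made up;
- `softmax` and `manual_cross_entropy` are our own hypothetical helper names.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = 1) -> np.ndarray:
    """Softmax along `axis`; subtracting the row max keeps np.exp from overflowing."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def manual_cross_entropy(logits: np.ndarray, labels: np.ndarray) -> float:
    """Mean cross-entropy from raw logits (N, C) and integer class indices (N,)."""
    probs = softmax(logits, axis=1)  # like dim=1: normalize over the class dimension
    # Probability assigned to each sample's target class.
    target_probs = probs[np.arange(len(labels)), labels]
    # Log of those probabilities, averaged over samples, then negated.
    return float(-np.mean(np.log(target_probs)))


rng = np.random.default_rng(0)
input_data = rng.normal(size=(4, 10))            # 4 samples, 10 classes (logits)
labels = np.array([1, 0, 4, 9], dtype=np.int64)  # hypothetical targets, 64-bit ints

probs = softmax(input_data, axis=1)
print(probs)
loss = manual_cross_entropy(input_data, labels)
print(loss)
```

Taking `np.log` of the softmax output mirrors the steps literally. For very large or very negative logits, a log-softmax computed with the log-sum-exp trick is more robust, which is why PyTorch computes a log-softmax internally rather than a plain softmax followed by a log.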
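
`nn.CrossEntropyLoss` is a loss function for discrete labeling tasks. The conventional cross-entropy $H(p, q) = -\sum_c p_c \log q_c$ compares two probability distributions. It therefore expects a predicted distribution over labels as input and a ground-truth distribution as the target. PyTorch's CrossEntropyLoss is instead defined as a combination of softmax and cross-entropy, applied to raw scores $x$ and a class index $y$. For a single sample:

$$\text{CrossEntropyLoss}(x, y) = H\big(\text{onehot}(y), \text{softmax}(x)\big) = -\log \frac{e^{x_y}}{\sum_c e^{x_c}}$$

Here onehot is a function that takes an index $y$ and expands it into a one-hot vector. Equivalently, you can formulate CrossEntropyLoss as a combination of LogSoftmax and NLLLoss.

When class weights are given, the mean-reduced loss is divided by the summed weights of the non-ignored target classes rather than by the number of samples. In the code path that handles the label-smoothing term (`smooth_loss`), the corresponding line carries the comment "loss is normalized by the weights to be consistent with nll_loss_nd":

`ret = smooth_loss.sum() / weight.gather(0, target.masked_select(~ignore_mask).flatten()).sum()`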
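The equivalences above can be checked numerically with a NumPy sketch. This is not PyTorch's implementation. It does two things:
- compares $H(\text{onehot}(y), \text{softmax}(x))$ with LogSoftmax followed by NLL;
- applies the weighted mean normalization, dividing by the summed target-class weights and skipping ignored samples.

The helper names, the example data, and `ignore_index=-100` (PyTorch's documented default) are our own illustrative choices.

```python
import numpy as np


def log_softmax(x: np.ndarray) -> np.ndarray:
    """Row-wise log-softmax for logits of shape (N, C), using the log-sum-exp trick."""
    shifted = x - x.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def ce_onehot(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Per-sample H(onehot(y), softmax(x)) = -sum_c onehot(y)_c * log softmax(x)_c."""
    onehot = np.eye(x.shape[1])[y]  # expand each class index into a one-hot row
    return -(onehot * log_softmax(x)).sum(axis=1)


def nll_of_log_softmax(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Per-sample LogSoftmax followed by NLL: -log_softmax(x)[n, y[n]]."""
    return -log_softmax(x)[np.arange(len(y)), y]


def weighted_mean_ce(x, y, weight, ignore_index=-100):
    """Weighted mean cross-entropy, skipping samples whose target is ignore_index.

    The weighted sum of per-sample losses is divided by the sum of the
    target-class weights, not by the number of samples.
    """
    keep = y != ignore_index
    x_kept, y_kept = x[keep], y[keep]
    w = weight[y_kept]  # weight of each kept sample's target class
    return float((w * nll_of_log_softmax(x_kept, y_kept)).sum() / w.sum())


rng = np.random.default_rng(1)
x = rng.normal(size=(5, 3))         # 5 samples, 3 classes (logits)
y = np.array([0, 2, 1, 1, 2])       # hypothetical targets
weight = np.array([1.0, 2.0, 0.5])  # hypothetical class weights

print(ce_onehot(x, y))
print(nll_of_log_softmax(x, y))     # should match ce_onehot up to rounding
y_ignored = np.array([0, 2, 1, -100, 2])  # fourth sample is ignored
print(weighted_mean_ce(x, y_ignored, weight))
```

Because the denominator is the sum of the target-class weights, scaling every class weight by the same constant leaves the weighted mean unchanged. With all weights equal to 1, it reduces to the ordinary mean over non-ignored samples.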

# PyTorch cross-entropy loss code #

Where is the workhorse code that actually implements cross-entropy loss in the PyTorch codebase? Starting at `loss.py`, tracking the source code for the cross-entropy loss leads to `loss.h`. However, that header only contains the module declaration, beginning `struct TORCH_API CrossEntropyLossImpl : public Cloneable`. The numerical work happens in PyTorch's native ATen C++ code (in recent versions, `aten/src/ATen/native/LossNLL.cpp`). That file is also where source comments such as `TODO: This code can path can be removed if #61309 is resolved` appear.

Whichever entry point you use, the target must be a tensor. Passing a NumPy array fails with an error stating that argument `target` (position 2) must be Tensor, not `numpy.ndarray`. Convert the array first, for example with `torch.from_numpy`.
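The `smooth_loss` fragments quoted in this post belong to the label-smoothing path. The NumPy sketch below shows what that path computes, under three assumptions:
- no class weights;
- the standard label-smoothing target $(1-\varepsilon)\,\text{onehot}(y) + \varepsilon / C$;
- ignored samples are zeroed and the mean is taken over the remaining samples.

The loss can then be computed directly against the smoothed target, or decomposed into a scaled NLL term plus a scaled `smooth_loss` term. That this decomposition matches PyTorch's `label_smoothing` option is our assumption based on the quoted fragments, not something verified here. The function names are hypothetical.

```python
import numpy as np


def log_softmax(x: np.ndarray) -> np.ndarray:
    """Row-wise log-softmax for logits of shape (N, C), using the log-sum-exp trick."""
    shifted = x - x.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def label_smoothed_ce_direct(x: np.ndarray, y: np.ndarray, eps: float) -> float:
    """Mean cross-entropy against the smoothed target (1 - eps) * onehot(y) + eps / C."""
    _, c = x.shape
    target = (1.0 - eps) * np.eye(c)[y] + eps / c
    return float(-(target * log_softmax(x)).sum(axis=1).mean())


def label_smoothed_ce_decomposed(x, y, eps, ignore_index=-100):
    """Same loss as (1 - eps) * nll + (eps / C) * smooth_loss, mean-reduced.

    smooth_loss[n] = -sum_c log_softmax(x)[n, c]. Ignored samples contribute 0,
    and both sums are divided by the number of non-ignored samples.
    """
    n, c = x.shape
    logp = log_softmax(x)
    keep = y != ignore_index
    safe_y = np.where(keep, y, 0)  # placeholder index so ignored rows can be gathered
    nll = np.where(keep, -logp[np.arange(n), safe_y], 0.0)
    smooth_loss = np.where(keep, -logp.sum(axis=1), 0.0)
    count = keep.sum()
    return float((1.0 - eps) * nll.sum() / count + (eps / c) * smooth_loss.sum() / count)


rng = np.random.default_rng(2)
x = rng.normal(size=(6, 4))        # 6 samples, 4 classes (logits)
y = np.array([0, 1, 2, 3, 0, 1])   # hypothetical targets
print(label_smoothed_ce_direct(x, y, eps=0.1))
print(label_smoothed_ce_decomposed(x, y, eps=0.1))
```

The decomposition works because the smoothed target is a mixture of the one-hot vector and the uniform distribution, and cross-entropy is linear in the target. With `eps=0` it reduces to the plain cross-entropy described earlier.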
